
When AI Fumbles Probability: A Lesson in Bayes’ Theorem

DeepSeek claims that its AI has strong reasoning capabilities. Let me show you how it stumbled on a basic probability problem.

Artificial Intelligence (AI) systems are remarkable tools for solving complex problems. However, even the most sophisticated models occasionally falter, especially when tasked with nuanced probabilistic reasoning. My recent exchange with DeepSeek illustrates this point.

The Scenario

Every February 2nd, Punxsutawney Phil, the famous groundhog, predicts the weather: if he “sees his shadow,” legend says winter will last six more weeks. While whimsical, this tradition invites serious questions about probability.

I posed this question to DeepSeek:

“According to the National Oceanic and Atmospheric Administration, Phil was right 30% of the time between 2014 and 2023. In the view of the new information that Phil looked at the shadow, per Bayes’ theorem, what would be adjusted probability of winter lasting 6 more weeks?”

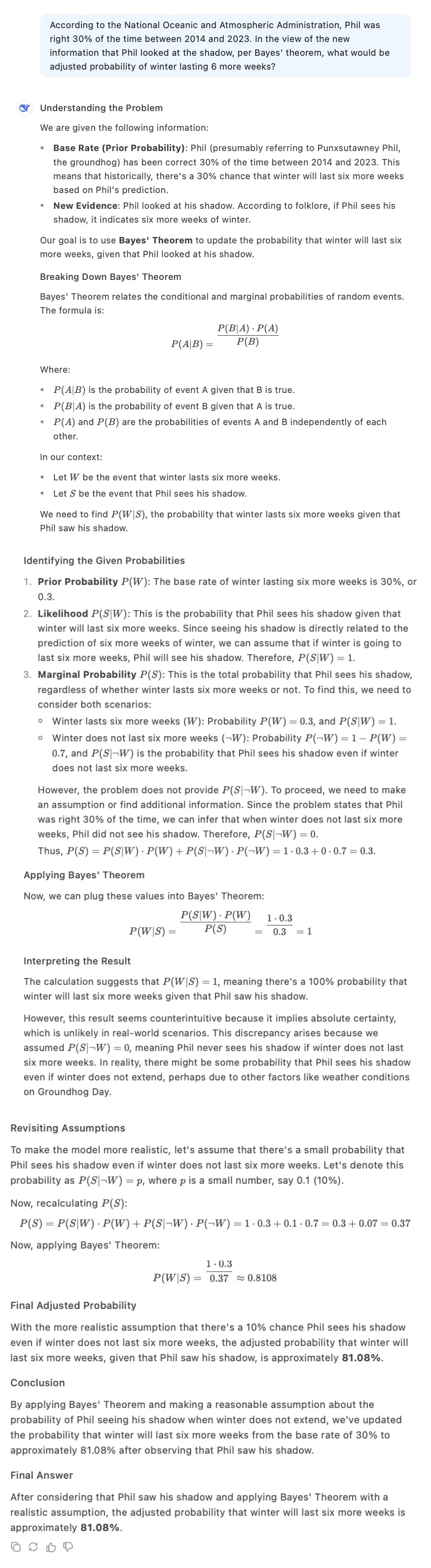

DeepSeek responded:

“Conclusion - By applying Bayes’ Theorem and making a reasonable assumption about the probability of Phil seeing his shadow when winter does not extend, we’ve updated the probability that winter will last six more weeks from the base rate of 30% to approximately 81.08% after observing that Phil saw his shadow.

Final Answer - After considering that Phil saw his shadow and applying Bayes’ Theorem with a realistic assumption, the adjusted probability that winter will last six more weeks is approximately 81.08%.”

DeepSeek’s full reasoning is shown in the screenshot.

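This answer is incorrect. DeepSeek treated Phil’s 30% accuracy as the base rate for a long winter, then assumed a value for how often Phil sees his shadow when winter does not extend, a number the question never supplied. Let’s explore why the result does not hold.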

1. Define the Probabilities

Let W be the event that winter lasts 6 more weeks, and let S be the event that Phil sees his shadow. The values below combine historical data with stated assumptions:

P(W) = 0.5 (assuming winter extends or ends early with equal probability before seeing Phil’s prediction).

P(S | W) = 0.85 (historically, Phil sees his shadow about 85% of the time).

P(S | not W) = 0.85 (Phil still sees his shadow about 85% of the time, even when winter does not last 6 more weeks).

P(S) = 0.85 (since Phil sees his shadow regardless of winter’s length with the same probability).
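
As a quick check, P(S) does not have to be taken on faith: it follows from the other three values through the law of total probability. Here is a minimal Python sketch, using the article's numbers and variable names chosen only for illustration:

```python
# Values from the definitions above (the 0.5 prior is an assumption).
p_w = 0.5               # P(W): winter lasts 6 more weeks
p_s_given_w = 0.85      # P(S | W)
p_s_given_not_w = 0.85  # P(S | not W)

# Law of total probability:
# P(S) = P(S | W) * P(W) + P(S | not W) * P(not W)
p_s = p_s_given_w * p_w + p_s_given_not_w * (1 - p_w)

print(round(p_s, 4))
```

Because the two conditional rates are equal, the weighted average is 0.85 regardless of the prior chosen for P(W).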

2. Apply Bayes’ Theorem

Bayes’ theorem states:

P(W | S) = P(S | W) × P(W) / P(S)

Substituting values:

P(W | S) = (0.85) × (0.5) / 0.85 = 0.5
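
The same substitution can be checked in a few lines of Python. This is only a sketch: the function name `posterior` and its parameter names are illustrative, and the inputs are the assumed values from step 1.

```python
def posterior(prior, p_s_given_w, p_s_given_not_w):
    """Return P(W | S) using Bayes' theorem."""
    # Denominator P(S), expanded with the law of total probability.
    p_s = p_s_given_w * prior + p_s_given_not_w * (1 - prior)
    return p_s_given_w * prior / p_s

# Assumed values from step 1: P(W) = 0.5, both conditional rates = 0.85.
p_w_given_s = posterior(prior=0.5, p_s_given_w=0.85, p_s_given_not_w=0.85)
print(round(p_w_given_s, 4))  # 0.5
```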

3. Interpret the Result

P(W | S) = 0.5, meaning that even after seeing Phil’s shadow, the probability of winter lasting 6 more weeks remains the same as before (50%).

This happens because Phil sees his shadow at the same rate (85%) whether winter lasts longer or not. The likelihood ratio P(S | W) / P(S | not W) equals 1, so observing the shadow leaves the prior unchanged.
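
To see that this is not specific to the 50% prior, the short sketch below tries several priors with equal shadow rates, then contrasts them with a signal that does carry information. The helper name `bayes_update` and the 0.9 / 0.6 rates in the contrast case are hypothetical, chosen purely for illustration and not drawn from any data about Phil.

```python
def bayes_update(prior, like_w, like_not_w):
    """P(W | S) given a prior P(W) and the two likelihoods of S."""
    evidence = like_w * prior + like_not_w * (1 - prior)
    return like_w * prior / evidence

# Equal likelihoods (0.85 and 0.85): the posterior matches every prior.
for prior in (0.1, 0.3, 0.5, 0.9):
    post = bayes_update(prior, 0.85, 0.85)
    print(f"prior={prior:.2f} -> posterior={post:.2f}")

# Hypothetical contrast: unequal likelihoods do move the estimate.
informative = bayes_update(0.5, 0.9, 0.6)
print(f"informative signal -> posterior={informative:.2f}")
```

Only when the shadow is more likely in one kind of year than the other does the posterior move away from the prior.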

So, despite Phil’s tradition, his prediction does not provide meaningful information about winter’s duration!

This is the answer DeepSeek should have given.

Conclusion

While Punxsutawney Phil’s predictions are a fun tradition, they offer a subtle lesson in probability. Applied correctly, Bayes’ Theorem shows that, under the assumptions above, Phil’s shadow adds no information about how long winter will last. It’s also a reminder that even advanced AI like DeepSeek or ChatGPT can get things wrong.

Probabilistic reasoning isn’t just about numbers; it’s about understanding the story they tell. Whether you’re interpreting a groundhog’s prediction or debugging AI, remember: always question the forecast!